In Part 1 and Part 2 of this blog series, I demonstrated how to build and install a bare metal Kubernetes cluster on the Hetzner Cloud platform. In this post I am going to take the cluster to the next level by deploying a component onto it and routing ingress traffic to that component.

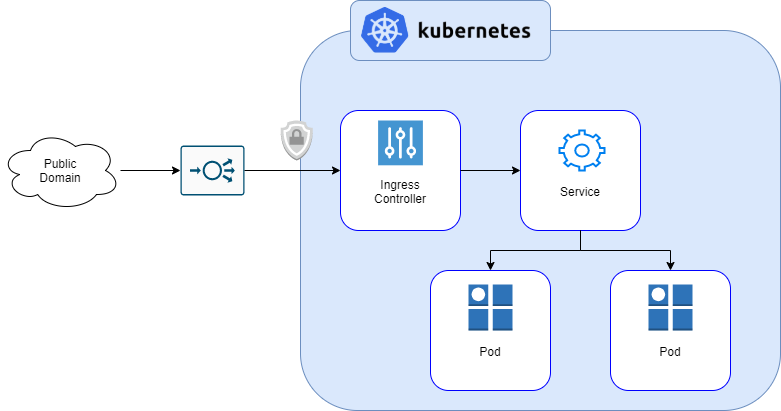

The big picture

The above image demonstrates my intentions with the architecture of the cluster. This can be further described as follows:

- An external user will navigate to a public domain.

- The public domain will be secured with an HTTPS certificate.

- DNS will direct the traffic from the public domain to a load balancer.

- The load balancer will route the HTTPS traffic to an ingress controller.

- The ingress controller will terminate the TLS (SSL) connection and route the resulting HTTP traffic to a service.

- The service will route the HTTP traffic to the pod that is running the application.

A service mesh will also be used to secure the internal cluster communication by using TLS. This is important as we are terminating the HTTPS traffic at the ingress controller.

Service Mesh

There are several choices when it comes to implementing a service mesh on Kubernetes. One of the most popular is Istio, which is widely used and provides some great features such as Kiali. However, I have opted to use LinkerD as I find it far simpler to set up and much less opinionated. Furthermore, the documentation and support ecosystem for LinkerD is superb.

Another deciding factor in choosing LinkerD is that in my initial evaluation of the different service mesh technologies on offer, I found that Istio would not support ACME HTTPS certificates whereas LinkerD will. This may have changed more recently but I have stuck with LinkerD as I like the experience of using it.

Installing LinkerD is simple, install the CLI by following the documentation and the run the following command:

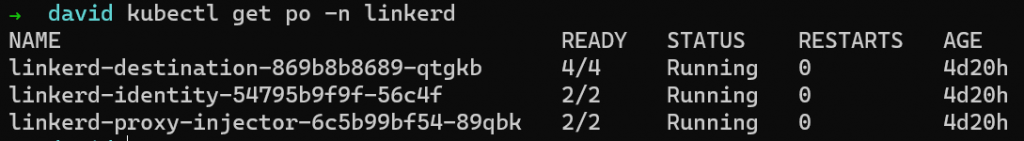

linkerd install | kubectl apply -f -There are other options for installation such as a Helm chart which would be used for real world deployments. Once LinkerD has been installed, you should see the pods running within your cluster. E.g.
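The LinkerD CLI also has a `check` subcommand that can be run before and after the install. The following is a minimal sketch rather than a required step. The function names `linkerd_preflight` and `linkerd_verify` are my own, and it assumes the control plane was installed into the default `linkerd` namespace.

```shell
# Hypothetical helper names; only the linkerd and kubectl commands are real.

# Run before installing: checks that the cluster can host the control plane.
linkerd_preflight() {
  linkerd check --pre
}

# Run after installing: validates the control plane, then lists its pods.
# Assumes the default "linkerd" namespace.
linkerd_verify() {
  linkerd check && kubectl get pods --namespace linkerd
}
```

A typical sequence would be `linkerd_preflight`, then the install command above, then `linkerd_verify`.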

LinkerD has various components that you can choose to install and expose such as a Dashboard and Grafana. I am not going to cover these features here but they are well documented on the LinkerD website.

Cert-Manager

Cert-Manager will allow us to automatically generate, and renew, HTTPS certificates for our public facing services. It does this using the ACME protocol, which you can read about in the documentation.

To enable this functionality, we must install Cert-Manager onto our cluster. You can use the following Helm commands to do this:

    helm repo add cert-manager https://charts.jetstack.io
    helm repo update
    helm install cert-manager cert-manager/cert-manager \
      --version v1.7.0 \
      --namespace cert-manager \
      --create-namespace \
      --set installCRDs=true \
      --set 'extraArgs={--dns01-recursive-nameservers-only,--dns01-recursive-nameservers=8.8.8.8:53\,1.1.1.1:53}'

The last set of arguments is important for enabling ACME to work correctly with Hetzner. The Hetzner Cert-Manager Webhook (see below) will use a DNS challenge when generating certificates. These arguments tell Cert-Manager to check the challenge records through the listed recursive nameservers (8.8.8.8 and 1.1.1.1), which allows the DNS names to be resolved correctly. The following article explains this in more detail:

Sometimes the problem is DNS (on Hetzner) (vadosware.io)

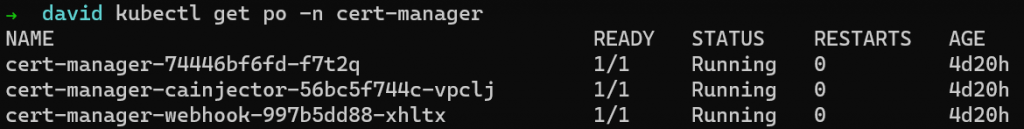

After the above Helm command has been executed, you should see the cert-manager pods running in the cluster.
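If certificates later fail to issue, one thing worth ruling out is that the `extraArgs` never reached the controller. The sketch below is an assumption-laden helper, not part of Cert-Manager: the function names are hypothetical, and it assumes the Helm release name `cert-manager` used above, which produces a Deployment of the same name whose first container is the controller.

```shell
# Hypothetical helper: prints the arguments of the cert-manager controller
# container. Assumes a Deployment named "cert-manager" in the
# "cert-manager" namespace, with the controller as its first container.
cert_manager_args() {
  kubectl get deployment cert-manager \
    --namespace cert-manager \
    --output jsonpath='{.spec.template.spec.containers[0].args}'
}

# Succeeds only if the recursive-nameserver flag is among the arguments.
has_recursive_nameservers_only() {
  cert_manager_args | grep -q -- '--dns01-recursive-nameservers-only'
}
```

For example, `has_recursive_nameservers_only && echo "flag is set"` prints the message only when the flag was applied.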

Cert-Manager Hetzner Webhook

Before we install the webhook, we must create a secret that enables the webhook to talk to the Hetzner DNS. It is important to note that for the webhook to work correctly, your domain needs to be managed by Hetzner DNS.
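The secret created below expects the API key in Base64 form. As a minimal sketch of one way to produce that value (the function name `encode_api_key` and the sample token are made up for illustration):

```shell
# Hypothetical helper: Base64-encodes its first argument on a single line.
# printf is used rather than echo so that no trailing newline is encoded,
# and tr strips any line wrapping that base64 adds to long values.
encode_api_key() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Example with a made-up token; substitute the one from the Hetzner DNS Console.
encode_api_key 'not-a-real-token'
echo
```

A stray newline in the encoded value is an easy mistake to make with `echo`, and it leads to authentication failures that are hard to spot. Kubernetes also accepts a `stringData` field on a Secret, which takes the plain value and encodes it for you, if you would rather skip this step.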

Create the following secret, replacing the api-key with one generated from the Hetzner DNS Console. You also need to Base64 encode the key:

    apiVersion: v1
    kind: Secret
    metadata:
      name: hetzner-secret
      namespace: cert-manager
    type: Opaque
    data:
      # Base64-encoded API key
      api-key: <<YOUR API KEY>>

We also need to create a ClusterIssuer resource which will be responsible for generating the certificates. In the following example the letsencrypt-staging provider is used. For production, you can replace this with letsencrypt-prod.

apiVersion: cert-manager.io/v1

kind: ClusterIssuer

metadata:

name: letsencrypt-staging

spec:

acme:

# The ACME server URL

# For production, use https://acme-v02.api.letsencrypt.org/directory

server: https://acme-staging-v02.api.letsencrypt.org/directory

# Email address used for ACME registration

email: mail@example.com # REPLACE THIS WITH YOUR EMAIL!!!

# Name of a secret used to store the ACME account private key

privateKeySecretRef:

name: letsencrypt-staging

solvers:

- dns01:

webhook:

# This group needs to be configured when installing the helm package, otherwise the webhook won't have permission to create an ACME challenge for this API group.

groupName: acme.yourdomain.tld

solverName: hetzner

config:

secretName: hetzner-secret

zoneName: example.com # Replace with your domain

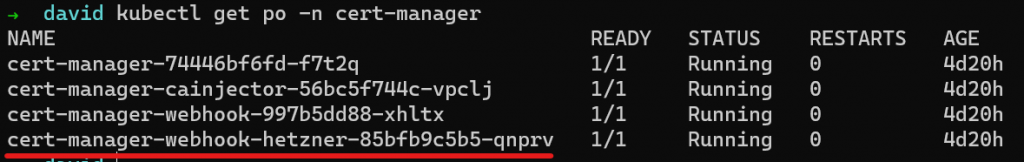

apiUrl: https://dns.hetzner.com/api/v1We can now install the webhook using the following helm command, replacing your domain name:

helm repo add cert-manager-webhook-hetzner https://vadimkim.github.io/cert-manager-webhook-hetzner

helm repo update

helm install cert-manager-webhook-hetzner cert-manager-webhook-hetzner/cert-manager-webhook-hetzner \

--namespace cert-manager \

--set groupName=acme.example.com Once the Helm command has been executed, you should now see the webhook pod running in the cluster:
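Before requesting any certificates it can be useful to confirm that the ClusterIssuer has registered with the ACME server. The following is a sketch under the assumption that the issuer reports a standard `Ready` condition in its status. The function name `cluster_issuer_is_ready` is hypothetical.

```shell
# Hypothetical helper: succeeds when the named ClusterIssuer reports Ready=True.
cluster_issuer_is_ready() {
  status="$(kubectl get clusterissuer "$1" \
    --output jsonpath='{.status.conditions[?(@.type=="Ready")].status}')"
  [ "$status" = "True" ]
}
```

For example, `cluster_issuer_is_ready letsencrypt-staging || kubectl describe clusterissuer letsencrypt-staging` prints the issuer's events only when something is wrong.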

Ingress Controller

There are several choices when it comes to Ingress Controllers; some examples include Nginx, Istio and Kong. I have opted to use Kong as it is open source and simple to set up and configure. Furthermore, there are lots of plugins available to enhance functionality.

When installing Kong, provided we have specified the correct configuration, a new Load Balancer will be created on the Hetzner Cloud platform. Create a new file named kongvalues.yaml with the following content:

    deployment:
      daemonset: true
    ingressController:
      installCRDs: false
    proxy:
      externalTrafficPolicy: Local
      annotations:
        load-balancer.hetzner.cloud/name: setup-example
        load-balancer.hetzner.cloud/location: nbg1
        load-balancer.hetzner.cloud/type: "lb11"
        load-balancer.hetzner.cloud/disable-private-ingress: "true"
        load-balancer.hetzner.cloud/uses-proxyprotocol: "false"
        load-balancer.hetzner.cloud/use-private-ip: "true"
    podAnnotations:
      # Quoted because annotation values must be strings
      config.linkerd.io/skip-inbound-ports: "8443"

We must specify that Kong should be installed as a daemonset rather than a standard deployment. Because externalTrafficPolicy is set to Local, a node only passes the load balancer's health check if it is running a Kong pod, and a daemonset places one on every node. This ensures that the load balancer can perform health checks on all nodes within the cluster.

The pod annotation for LinkerD specifies that traffic on port 8443 should not go through the LinkerD proxy. Traffic will be incorrectly routed without this setting.

In my setup I am using an instance of Kong per environment, with one namespace for each environment, for example DEV and UAT. This means that a load balancer will be created per environment. The following is used to install Kong into each environment:

helm install kong-dev kong/kong \

--namespace myproject-dev \

--create-namespace \

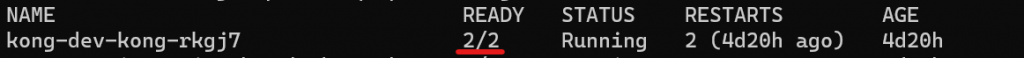

-f kongvalues.yamlOnce the Helm command has been successfully executed, you should see the Kong pods running in the cluster. Notice the pod count, I have two nodes in this example:

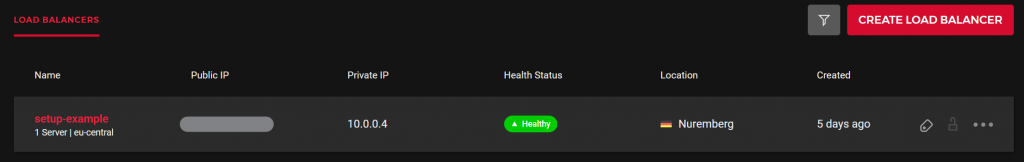

If you check back in the Hetzner Cloud console, you should also see a newly created load balancer:

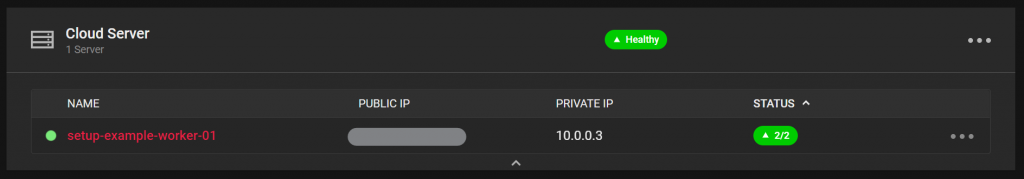

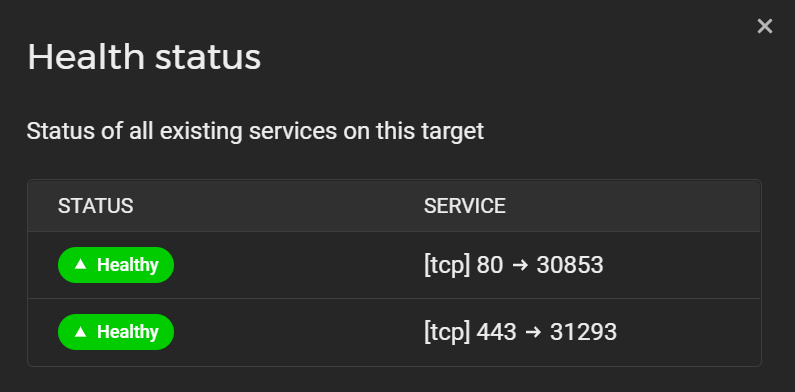

We can see from the above that the Health Status is healthy. This is because we installed Kong as a daemonset. If you omit this configuration, the health status will be “Mixed” as only one node will be reporting itself as healthy. We can see further details of the health check, although in this example there is just a single node:

We can also see the corresponding load balancer type service running on our Kubernetes cluster:
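The same information is available from the command line, which is handy when you need the public IP for DNS later on. This is only a sketch: the function name `list_load_balancers` is my own, and it prints the first address Kubernetes reports for each service, which may not be the one you want if the load balancer has several.

```shell
# Hypothetical helper: prints "name<TAB>ip" for every LoadBalancer Service
# in the given namespace, using the first ingress address reported.
list_load_balancers() {
  kubectl get services --namespace "$1" \
    --output jsonpath='{range .items[?(@.spec.type=="LoadBalancer")]}{.metadata.name}{"\t"}{.status.loadBalancer.ingress[0].ip}{"\n"}{end}'
}
```

For the DEV environment used in this post, that would be `list_load_balancers myproject-dev`.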

Deploying a Component

The final piece of the puzzle is to deploy a component that joins all of these features together. For my purposes, I have a React Application that is built and packaged via an Azure DevOps pipeline. The artifacts generated by the build are a Helm Chart and a Docker Image. I use Azure Container Registry to host these artifacts but you could equally use DockerHub or similar. This component will be referred to as presentation-main.

I am not going to detail everything about my component and the build process here. Instead, I am just going to focus on the important parts.

KongPlugins

For Kong and LinkerD to work together correctly we must use some KongPlugins to transform the request and response. You can read about why this is necessary here. The following two plugins need to be created:

    apiVersion: configuration.konghq.com/v1
    kind: KongPlugin
    metadata:
      name: presentation-main-linkerd-request-header
      namespace: myproject-dev
    plugin: request-transformer
    config:
      add:
        headers:
          # l5d-dst-override tells LinkerD which service the request is for
          - l5d-dst-override:presentation-main.myproject-dev.svc.cluster.local
    ---
    apiVersion: configuration.konghq.com/v1
    kind: KongPlugin
    metadata:
      name: presentation-main-linkerd-response-header
      namespace: myproject-dev
    config:
      append:
        headers: []
    plugin: response-transformer

Ingress

An Ingress needs to be created to handle traffic from the load balancer and distribute it to services in the cluster. The following ingress will instruct Cert-Manager to create certificates for the hosts specified. I also add an annotation to force any HTTP requests to be redirected to HTTPS. Once traffic is received by the ingress, the HTTPS connection is terminated and the request is passed on to the relevant service on port 80.

NOTE – You must handle the domain to public IP address mapping as a prerequisite. It is up to you to create the A records for your domain that point to the public IP address of the load balancer.
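A quick way to confirm the A records are in place before creating the Ingress is to compare what DNS returns with the load balancer's public IP. This is a rough sketch that assumes `dig` is installed. The function name `a_record_matches` and the address in the usage example are placeholders.

```shell
# Hypothetical helper: succeeds when HOST has an A record exactly equal to IP.
# Assumes dig (from the dnsutils or bind-utils package) is installed.
a_record_matches() {
  dig +short "$1" A | grep -qxF -- "$2"
}
```

For example, `a_record_matches www.example.com 203.0.113.10 && echo "DNS is ready"`, substituting your own domain and the load balancer's address.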

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: presentation-main
      namespace: myproject-dev
      annotations:
        # Must name a ClusterIssuer that exists in the cluster
        cert-manager.io/cluster-issuer: "letsencrypt-prod"
        konghq.com/plugins: presentation-main-linkerd-request-header,presentation-main-linkerd-response-header
        ingress.kubernetes.io/force-ssl-redirect: "true"
      labels:
        environment: dev
        component: ingress
    spec:
      ingressClassName: kong
      tls:
        - hosts:
            - www.example.com
            - example.com
          secretName: presentation-main-cert-dev
      rules:
        - host: www.example.com
          http:
            paths:
              - path: "/"
                pathType: Prefix
                backend:
                  service:
                    name: presentation-main
                    port:
                      number: 80
        - host: example.com
          http:
            paths:
              - path: "/"
                pathType: Prefix
                backend:
                  service:
                    name: presentation-main
                    port:
                      number: 80

Service

Traffic from the ingress will be routed to a service with the name presentation-main on port 80. This service is defined as follows:

    apiVersion: v1
    kind: Service
    metadata:
      name: presentation-main
      namespace: myproject-dev
      labels:
        environment: dev
        component: presentation-main
    spec:
      ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 80
      selector:
        app: presentation-main

Deployment

The service above will forward traffic on port 80 to any pod with an app label of presentation-main. The deployment resource below will create that pod within the cluster:

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: myproject-dev

name: presentation-main

spec:

selector:

matchLabels:

app: presentation-main

replicas: 1

template:

metadata:

labels:

app: presentation-main

environment: dev

component: presentation-main

spec:

containers:

- name: presentation-main

image: <<URL OF CONTAINER LIBRARY AND VERSION>>

imagePullPolicy: Always

ports:

- containerPort: 80

envFrom:

- configMapRef:

name: presentation-main

imagePullSecrets:

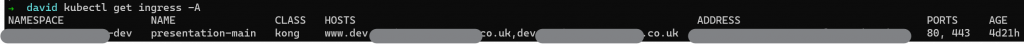

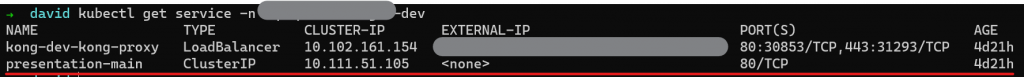

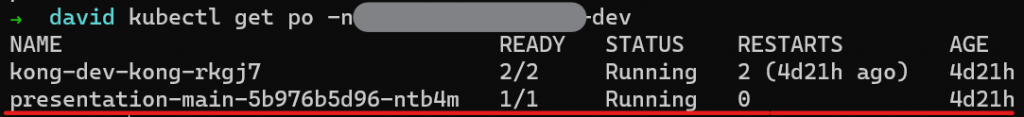

- name: docker-cfgOnce all of these resources have been created, we can view them within the cluster:

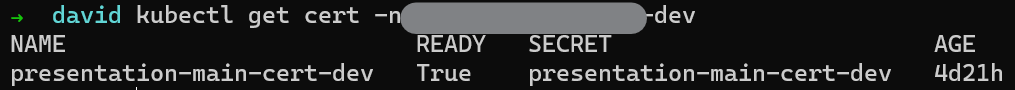

We can also see the generated HTTPS certificates:

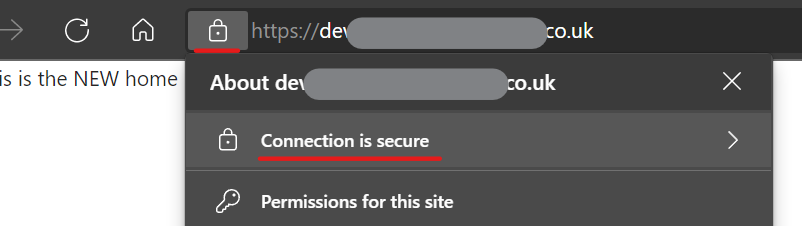

If we navigate to our site, HTTPS redirection will be enabled and the HTTPS certificate will be valid. The certificate will automatically renew every 90 days.
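The same two checks can be made from a terminal. The following is a sketch that assumes `curl` and `openssl` are installed. The function names are hypothetical, and `example.com` in the usage note stands in for your own domain.

```shell
# Hypothetical helper: succeeds when a plain-HTTP request to HOST is answered
# with a 3xx redirect whose target starts with https://.
redirects_to_https() {
  result="$(curl --silent --output /dev/null \
    --write-out '%{http_code} %{redirect_url}' "http://$1/")"
  case "$result" in
    3??\ https://*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: prints the notBefore/notAfter dates of the certificate
# served by HOST on port 443.
certificate_dates() {
  openssl s_client -connect "$1:443" -servername "$1" </dev/null 2>/dev/null |
    openssl x509 -noout -dates
}
```

Running `redirects_to_https example.com && certificate_dates example.com` should confirm the redirect and show the validity window of the certificate. While the staging issuer is in use, the certificate will not be trusted by browsers, which is expected.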

Conclusion

By using Hetzner’s cloud and DNS offerings I hope that you have seen that it is possible to build a cost-effective Kubernetes cluster in the cloud. We can utilise technologies such as Service Mesh, Ingress and HTTPS Certificate generation to provide a really neat solution.

I hope that you have enjoyed reading this blog series and that it helps you on your journey to building your own Kubernetes cluster. At the time of writing, there was no single place that explained these concepts, and it took me days of research and trial and error to get everything working together correctly. I would love to hear your comments below and will do my best to answer any questions you may have.