The Problem

I recently moved a lot of my infrastructure from Azure to DigitalOcean (DO) because it is much more cost-effective. One of the biggest cost savers was switching from an Azure SQL Database to a MySQL cluster.

My Azure SQL Database was costing me around $10 per month for a single database that was really small and hardly used. I set up an entire MySQL cluster on DO that allows as many databases as I like, within the specification of the cluster. At the time of writing, a single-node cluster with 1 GB RAM, 1 vCPU and 10 GB storage costs $15 per month.

One issue I had with the MySQL cluster is that I had to leave it open to connections from the outside world. Until recently, you could only restrict access to other droplets within DO, which excluded K8S worker nodes and external IP addresses. The resources that need access to the cluster are as follows:

- K8S worker nodes running in DO.

- Machines on my own network, for development and administration.

- Azure DevOps build agents.

Securing the DO MySQL Cluster

DO recently updated the security policy to allow both K8S worker nodes and external IP addresses. With this in mind, I set myself the task of locking down my database cluster.

The first two of my requirements were simple and easy to achieve. Access from Azure DevOps Pipelines was not so easy. I investigated whether I could get the IP ranges used by Azure build agents, and it turns out that this is possible.

The problem is that there are around 50 IP ranges per region and they can change on a weekly basis. I really did not want to be updating this data regularly. Instead, I decided to try setting up my own self-hosted Azure Pipelines build agent using a DO droplet running Ubuntu.
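If you would rather script the lock-down than click through the control panel, something along these lines might work with the `doctl` CLI. This is only a sketch: the function name is mine, every ID and address in the usage comment is a placeholder, and it assumes `doctl` is installed and authenticated. The rule format is `<type>:<value>`, for example `k8s`, `droplet` or `ip_addr`.

```shell
#!/usr/bin/env bash
# Sketch: add trusted sources to a DO managed database using doctl.
# allow_db_sources is a hypothetical helper, not part of doctl itself.

allow_db_sources() {
  local db_id="$1"
  shift
  local rule
  for rule in "$@"; do
    # Each rule is <type>:<value>, e.g. k8s:<cluster-id> or ip_addr:<address>.
    doctl databases firewalls append "$db_id" --rule "$rule" || return 1
  done
}

# Usage (all values are placeholders):
#   allow_db_sources "<database-id>" \
#     "k8s:<cluster-id>" "ip_addr:203.0.113.10" "droplet:<build-agent-droplet-id>"
```

Passing the rules as arguments keeps the three kinds of source (K8S cluster, home IP address, build agent droplet) in one place.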

Creating a Self-Hosted Build Agent on Azure DevOps

It turns out that this is relatively easy to achieve. The following link details the process of setting up your Linux machine as a build agent.

https://docs.microsoft.com/en-us/azure/devops/pipelines/agents/v2-linux?view=azure-devops
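As a rough sketch of the configuration step from that article, an unattended setup might look something like the following. The organisation URL, pool name, agent name and the `AZP_TOKEN` variable are placeholders I have made up for illustration. The flags are the ones the Microsoft documentation describes for `config.sh`, so check them against the agent version you download.

```shell
#!/usr/bin/env bash
# Sketch: build the argument list for an unattended ./config.sh run.
# build_config_args and AZP_TOKEN are illustrative names, not part of the agent.

build_config_args() {
  local org_url="$1" pool="$2" agent_name="$3"
  # One argument per line so the caller can read them into an array.
  printf '%s\n' \
    --unattended \
    --url "$org_url" \
    --auth pat \
    --token "${AZP_TOKEN:?set AZP_TOKEN to a personal access token}" \
    --pool "$pool" \
    --agent "$agent_name" \
    --acceptTeeEula
}

# Usage, from the extracted agent directory (values are placeholders):
#   mapfile -t args < <(build_config_args "https://dev.azure.com/my-org" "do-pool" "do-agent-01")
#   ./config.sh "${args[@]}"
```

Keeping the token in an environment variable avoids writing it into the script itself.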

Challenges

Running Installation as sudo User

When I ran the ./config.sh script, it failed with the following error:

Must not run with sudo

A bit of Google research led me to the following discussion, which suggested that I could set an environment variable to override this check and run the script with sudo. I didn’t really want to have to create another user just for this.

https://github.com/Microsoft/azure-pipelines-agent/issues/1481

I set the environment variable ALLOW_RUNASROOT to 1 and tried again, but I still got the same error. After quite a lot of investigation, I came across the following discussion, which pointed out that the variable name was incorrect. It should have been AGENT_ALLOW_RUNASROOT.

https://github.com/bbtsoftware/docker-azure-devops-agent/pull/5

This worked perfectly and allowed me to set up the agent and continue with the installation as per the Microsoft article. I opted to start the agent via systemd so that it would always be running in the background. Otherwise, I would have had to leave a console open.
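The overall sequence can be sketched roughly as below. The `run` helper and the `DRY_RUN` switch are my own additions so the commands can be previewed without executing anything. `svc.sh` is the service script the agent provides once it has been configured, and the sketch assumes you are already in the agent directory as root.

```shell
#!/usr/bin/env bash
# Sketch: configure the agent as root and register it as a systemd service.
# run() and DRY_RUN are illustrative helpers, not part of the agent.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"   # preview only: print the command
  else
    "$@"          # execute the command
  fi
}

install_agent_service() {
  # The correctly named variable that lets config.sh run as root.
  export AGENT_ALLOW_RUNASROOT=1
  run ./config.sh &&
    run ./svc.sh install &&   # register the systemd unit
    run ./svc.sh start &&     # start the agent in the background
    run ./svc.sh status       # confirm that it is running
}

# Preview the commands without running them:
#   DRY_RUN=1 install_agent_service
```

Chaining with `&&` stops the sequence at the first failure, so the service is not installed if configuration fails.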

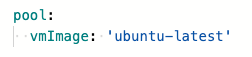

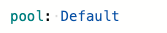

To try this out, I updated one of my build jobs to use my agent rather than a Microsoft-hosted one. This just required changing the agent pool in the pipeline's YAML.

When I triggered a build on my newly provisioned agent, I ran into an issue with Docker. The pipeline in question builds a Docker image from my component and pushes it to Docker Hub.

Using Docker on the Build Agent

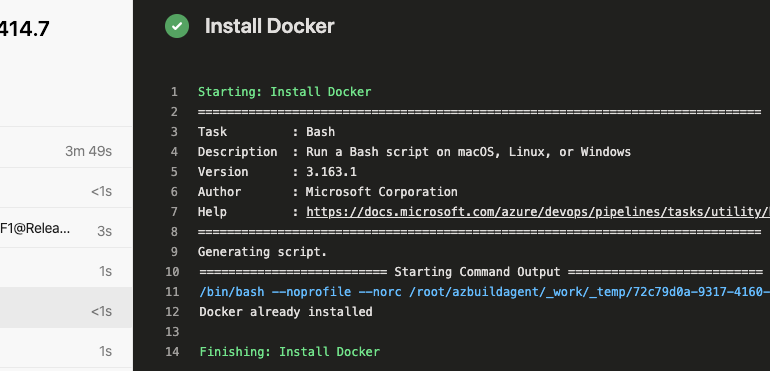

Azure Pipelines has a task named “Docker CLI Installation”, so I decided to try that. While this task succeeded, the Docker build still failed with the following error:

##[error]Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

It would appear that the Docker CLI Installation task is not enough to install and start up Docker on the build agent. I tried a few other ways to get this working with little success, and in the end I opted for a custom Bash script. The step in my YAML template is as follows:

    - task: Bash@3
      displayName: "Install Docker"
      inputs:
        targetType: 'inline'
        script: |
          # Install Docker only if it is not already present on the agent
          if command -v docker >/dev/null 2>&1 && docker --version; then
            echo "Docker already installed"
          else
            curl -fsSL https://get.docker.com -o get-docker.sh
            sh get-docker.sh
            # Start Docker now and enable it on boot
            sudo systemctl enable --now docker
          fi

This script downloads and installs Docker, provided that it has not already been installed. It also starts Docker as a service on the build agent. With this in place, my pipeline worked exactly as it did before on a Microsoft-hosted agent.
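Since the original failure was the daemon not being reachable, a step like the following could also confirm that Docker is actually up before the build continues. This is only a sketch: the function name, retry count and delay are arbitrary choices of mine, and it relies on `docker info` returning a non-zero exit code while the daemon is unavailable.

```shell
#!/usr/bin/env bash
# Sketch: wait until the Docker daemon answers, or give up after a few tries.
# wait_for_docker is a hypothetical helper name.

wait_for_docker() {
  local attempts="${1:-10}" delay="${2:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # docker info talks to the daemon, so it fails while the daemon is down.
    if docker info >/dev/null 2>&1; then
      echo "Docker daemon is reachable"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Docker daemon did not respond after $attempts attempts" >&2
  return 1
}

# Usage: wait_for_docker 10 2 || exit 1
```

Failing the step early gives a clearer error than letting the later docker build fail.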

Conclusion

I have now successfully locked down my database cluster to only droplets in my DO account and my own IP address. I can sleep a little easier at night now 🙂

While implementing this solution, I found another benefit of using a self-hosted build agent: NuGet package caching. When using a hosted agent, you get a new machine every time, which means that all NuGet dependencies need to be restored on every build. A self-hosted build agent results in the same machine being used each time, assuming you only have one. The first build took slightly longer, but subsequent builds run really quickly because the NuGet dependencies are already present on the build agent. This was a nice serendipitous moment.
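If you are curious how much has been cached on the agent, a small check like this might help. It is a sketch under a couple of assumptions: that the agent uses the default NuGet global packages folder (`~/.nuget/packages` on Linux, or whatever `NUGET_PACKAGES` points to), and that each top-level directory there corresponds to one package ID. The function name is my own.

```shell
#!/usr/bin/env bash
# Sketch: count the packages in the NuGet global packages folder.
# nuget_cache_summary is a hypothetical helper name.

nuget_cache_summary() {
  # NUGET_PACKAGES overrides the default location when it is set.
  local dir="${1:-${NUGET_PACKAGES:-$HOME/.nuget/packages}}"
  if [ ! -d "$dir" ]; then
    echo "No NuGet cache found at $dir"
    return 1
  fi
  local count
  # Each top-level directory is assumed to be one cached package ID.
  count=$(find "$dir" -mindepth 1 -maxdepth 1 -type d | wc -l | tr -d ' ')
  echo "Cached packages: $count"
}

# Usage: nuget_cache_summary            (default location)
#        nuget_cache_summary /some/dir  (explicit folder)
```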

I hope you find this information useful and that it saves you some time in getting a self-hosted build agent up and running. Please feel free to leave me a comment or question below.